Summary:

This document outlines the process for configuring iSCSI in a VMware vSphere 4 and ESX 4 environment. It assumes that the reader already knows their way around vCenter 4 using the VMware vSphere Client and has the rights needed to perform every task described. The setup is geared toward the HP P4500 SAN appliance but could be used in other environments. The author is in no way affiliated with VMware or HP and is not responsible for any adverse effects, performance issues, or downtime that may result from performing the steps in this document. In other words: “Use at your own risk!”

For HP P4500 Configuration - here

Requirements:

- vSphere 4

- ESX host with at least 6x 1 GbE NIC ports

- 2x 1 GbE network switches

- Multiple IP addresses (one for each NIC used for iSCSI, vMotion, and the service console)

Create VMkernel Port Group:

- Log in to vCenter with the VMware vSphere Client

- Select the ESX host, then click the “Configuration” tab

- Click the “Networking” section

- Click “Add Networking”

- Select “VMkernel”, then click Next

- Select the appropriate NIC ports to be used for iSCSI traffic

- Name the port group, e.g. iSCSI-# or iSCSI-1, then click Next

- Enter the IP address and subnet mask. Edit the gateway if needed, then click Next

- Click Finish

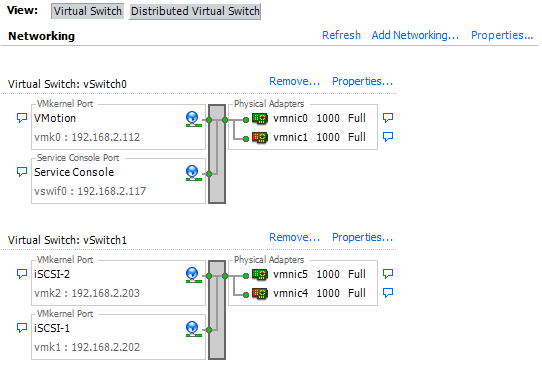
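The same port group setup can also be sketched from the ESX service console with `esxcfg-vswitch` and `esxcfg-vmknic`. This is a minimal sketch, not the method used in this post: the vSwitch name, vmnic numbers, port group names, and IP addresses are all placeholder assumptions, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: the vSwitch, vmnic, port group, and IP values are placeholders.
DRY_RUN=${DRY_RUN:-1}   # 1 = print each command, 0 = execute it

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# create_vswitch VSWITCH UPLINK...
create_vswitch() {
    vswitch=$1
    shift
    run esxcfg-vswitch -a "$vswitch"                # create the vSwitch
    for uplink in "$@"; do
        run esxcfg-vswitch -L "$uplink" "$vswitch"  # link a physical NIC
    done
}

# add_vmk_portgroup VSWITCH PORTGROUP IP NETMASK
add_vmk_portgroup() {
    run esxcfg-vswitch -A "$2" "$1"             # add the port group
    run esxcfg-vmknic -a -i "$3" -n "$4" "$2"   # add its VMkernel NIC
}

create_vswitch vSwitch1 vmnic2 vmnic3
add_vmk_portgroup vSwitch1 iSCSI-1 10.0.0.11 255.255.255.0
add_vmk_portgroup vSwitch1 iSCSI-2 10.0.0.12 255.255.255.0
```

Review the printed commands against your own host before running them for real.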

Standard vSwitch Configuration:

Each VMkernel port group is configured with a single active physical NIC port. Set every other NIC port to “Unused” by checking “Override vSwitch failover order”, selecting the NIC, then clicking the “Move Down” button until it is listed as unused. There should not be any standby adapters.
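Before clicking through the failover settings, it may help to write the intended layout down and check it against the rule above (exactly one active NIC per port group, no standby adapters). The sketch below does not read anything from ESX; it only validates a hand-written plan, and the line format (`PORTGROUP ACTIVE STANDBY`, with `-` meaning none) is an assumption made for this example.

```shell
#!/bin/sh
# check_teaming reads lines of the form "PORTGROUP ACTIVE_NICS STANDBY_NICS".
# NIC fields are comma-separated lists, or "-" for none (hypothetical format).
check_teaming() {
    rc=0
    while read -r pg active standby; do
        [ -z "$pg" ] && continue
        case $active in
            -|*,*) echo "$pg: needs exactly one active NIC"; rc=1 ;;
        esac
        if [ "$standby" != "-" ]; then
            echo "$pg: standby adapters are not allowed"
            rc=1
        fi
    done
    return $rc
}

# Placeholder plan: two port groups, one active NIC each, nothing on standby.
check_teaming <<EOF && echo "teaming plan OK"
iSCSI-1 vmnic2 -
iSCSI-2 vmnic3 -
EOF
```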

From the command line, bind both VMkernel ports to the software iSCSI adapter. The VMkernel port (vmk#) and adapter (vmhba##) numbers must match those on the ESX or ESXi server and virtual switch you are configuring. For example:

- esxcli swiscsi nic add -n vmk1 -d vmhba33

- esxcli swiscsi nic add -n vmk2 -d vmhba33

Verify the binding with the following command; both vmk1 and vmk2 should be listed.

esxcli swiscsi nic list -d vmhba33
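The bind and verify steps above can be wrapped in a small script so the same commands are repeated on each host. This is a sketch under assumptions: `vmk1`, `vmk2`, and `vmhba33` are the example values from this post and will differ per host, the helper names are made up for illustration, and the check simply looks for each vmk name as a whole word in the listing rather than relying on a particular output layout.

```shell
#!/bin/sh
DRY_RUN=${DRY_RUN:-1}   # 1 = print each command, 0 = execute it

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# bind_vmk_ports HBA VMK... : bind each VMkernel port, then list the bindings
bind_vmk_ports() {
    hba=$1
    shift
    for vmk in "$@"; do
        run esxcli swiscsi nic add -n "$vmk" -d "$hba"
    done
    run esxcli swiscsi nic list -d "$hba"
}

# missing_vmk_ports LISTING VMK... : print every VMK not found in LISTING
missing_vmk_ports() {
    listing=$1
    shift
    for vmk in "$@"; do
        printf '%s\n' "$listing" | grep -qw "$vmk" || echo "$vmk"
    done
}

bind_vmk_ports vmhba33 vmk1 vmk2
```

With `DRY_RUN=0`, the output of `bind_vmk_ports` can be captured and passed to `missing_vmk_ports` to flag any port that did not bind.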

Once configured correctly, perform a rescan of the iSCSI adapter. An iSCSI session should be connected for each VMkernel bound to the software iSCSI adapter. This gives each iSCSI LUN two iSCSI paths using two separate physical network adapters.
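The rescan and the path check can also be done from the service console. The sketch below assumes `esxcfg-rescan` and `esxcfg-mpath` (neither is named in this post) and the example adapter `vmhba33`. The path count is plain arithmetic from the paragraph above: one path per bound VMkernel port for each LUN.

```shell
#!/bin/sh
DRY_RUN=${DRY_RUN:-1}   # 1 = print each command, 0 = execute it

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# rescan_and_list HBA : rescan the adapter, then show devices with their paths
rescan_and_list() {
    run esxcfg-rescan "$1"
    run esxcfg-mpath -b
}

# expected_paths BOUND_VMK_PORTS LUNS : total paths to expect after the rescan
expected_paths() {
    echo $(( $1 * $2 ))
}

rescan_and_list vmhba33
echo "paths expected for 2 bound ports and 3 LUNs: $(expected_paths 2 3)"
```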

To achieve load balancing across the two paths, datastores should be configured with a path selection policy of round robin. This can be done manually for each datastore in the vSphere client or ESX can be configured to automatically choose round robin for all datastores. To make all new datastores automatically use round robin, configure ESX to use it as the default path selection policy from the command line:

- esxcli corestorage claiming unclaim --type location

- esxcli nmp satp setdefaultpsp --satp VMW_SATP_DEFAULT_AA --psp VMW_PSP_RR

- esxcli corestorage claimrule load

- esxcli corestorage claimrule run
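The four commands can be grouped into one function, written here with double-dash long options (`--type`, `--satp`, `--psp`). Since the default only covers new datastores, the sketch also shows a per-device alternative using `esxcli nmp device setpolicy`, which is not part of this post. The device identifier is a made-up placeholder; take real ones from `esxcli nmp device list`.

```shell
#!/bin/sh
DRY_RUN=${DRY_RUN:-1}   # 1 = print each command, 0 = execute it

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# set_default_rr : make round robin the default PSP for VMW_SATP_DEFAULT_AA
set_default_rr() {
    run esxcli corestorage claiming unclaim --type location
    run esxcli nmp satp setdefaultpsp --satp VMW_SATP_DEFAULT_AA --psp VMW_PSP_RR
    run esxcli corestorage claimrule load
    run esxcli corestorage claimrule run
}

# set_device_rr DEVICE... : switch individual existing devices to round robin
set_device_rr() {
    for dev in "$@"; do
        run esxcli nmp device setpolicy --device "$dev" --psp VMW_PSP_RR
    done
}

set_default_rr
set_device_rr naa.EXAMPLE0001   # hypothetical device ID, replace with your own
```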

It is important to note that native vSphere 4 multi-pathing cannot be used with HP P4000 Multi-Site SAN configurations that utilize more than one subnet and VIP (virtual IP). Multiple paths cannot be routed across those subnets by the ESX/ESXi 4 initiator.

Logical Layout:

theHyperAdvisor.com

I did everything above except the command-line part for binding the VMkernel ports, yet everything appears to work properly.

Though in ESX 4.x Storage Views it says ‘Partial/No Redundancy.’

Must I do the command-line binding to get things working “better”??

It’s already in production, it seems to me I must move the VMs off each of 3 hosts and perform this step??

On our MSA2012i SAN ESX 4.0 Update 1 defaulted to MRU — is it better/safer to use round robin??

Thank you, Tom

The above command line for binding the NICs (vmkiscsi-tool etc.) gave me an error: Bad Parameter for the API, using the same line, with vmhba33 instead…I will leave well enough alone for now.

Yeah, I screwed up but I updated the post with the correct command.

@Tom :

Make sure you have the second NIC in each iSCSI VMkernel port group set as an unused adapter, NOT as a standby adapter.

Good luck

hi Antone or other

I am trying to follow the setup here; it goes fine until the command-line part…

I have installed the vSphere 4.1 CLI utility, but it seems I have gone blind and stupid?

How/where do I start the CLI utility??? It is not in “Programs” and I can’t seem to find any exe file to run…?

Can anyone help?

Regards

Carsten

You do the command line part in the service console.